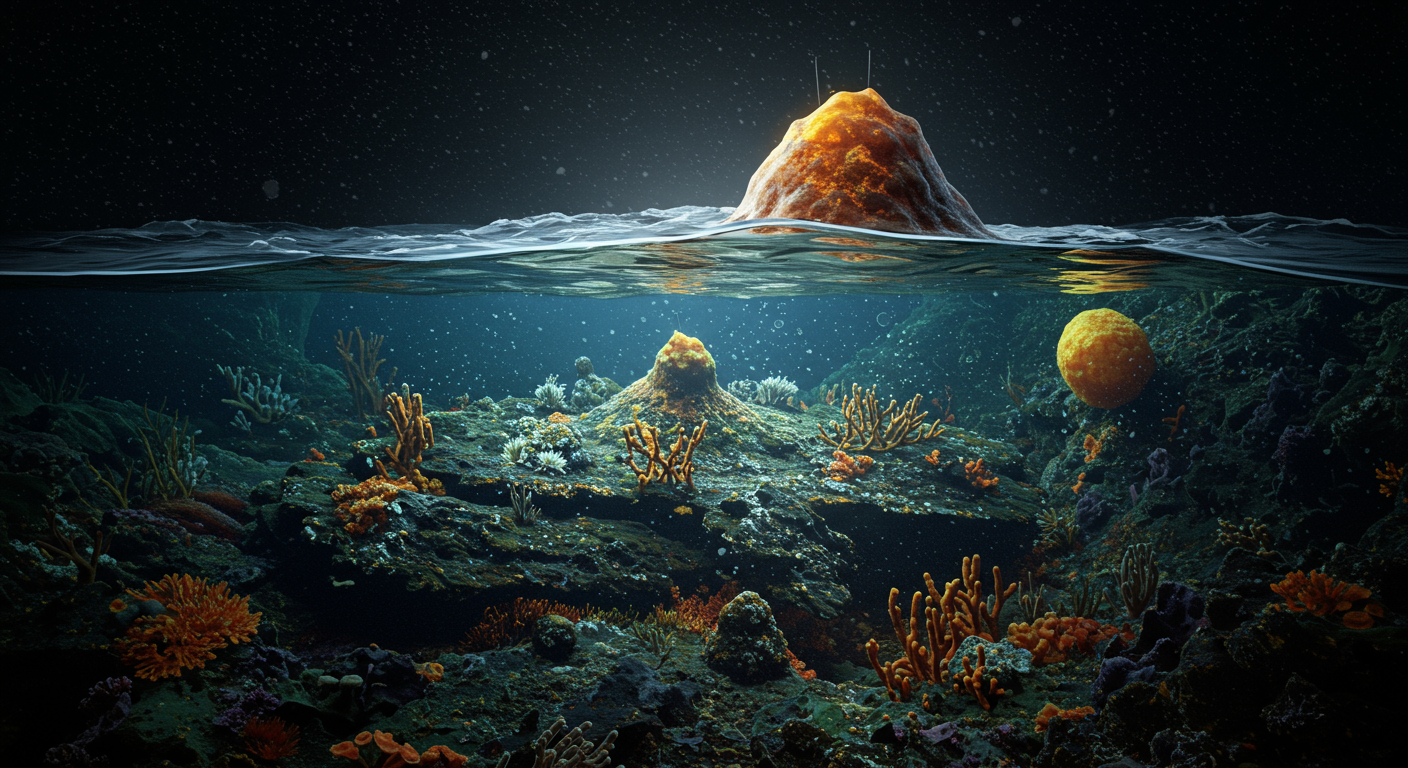

Artificial intelligence is rapidly merging with biotechnology, creating powerful new tools that could, according to national security experts, lower the barrier to developing and deploying biological weapons. Government and private-sector leaders are raising alarms that AI-driven systems might soon enable state and non-state actors to design novel pathogens, bypass existing safeguards, and plan biological attacks with greater ease than ever before, posing a catastrophic threat that could exceed the scope of recent pandemics.

While the convergence of AI and biology promises breakthroughs in medicine and agriculture, it carries a significant dual-use risk. Security analysts are focused on how AI, particularly large language models (LLMs) and specialized biological design tools (BDTs), could provide the knowledge and means to create more effective and targeted bioweapons. A recent report from the Center for a New American Security (CNAS) highlights concerns that these advancements could be used to create unprecedented superviruses or pathogens tailored to specific genetic groups. Although some studies indicate that current AI models do not yet measurably increase the operational risk of an attack, the pace of technological change has prompted urgent calls for new, adaptive security protocols to mitigate a speculative but potentially devastating future threat.

A New Era of Biological Threats

The nature of biosecurity risks is being reshaped by artificial intelligence. For decades, the development of sophisticated bioweapons required immense resources and highly specialized tacit knowledge, largely confining the most significant threats to state-level programs. However, AI is poised to democratize this capability, potentially providing individuals or small groups with the expertise needed to overcome long-standing technical hurdles. Experts from the Centre for Long-Term Resilience note that AI tools could impact nearly every step of the bioweapon development process, from the initial malicious intent to the final act of release.

The primary concern is that AI could significantly lower the barrier to entry for would-be bioterrorists. Such assistance could include providing detailed instructions, identifying necessary materials, and troubleshooting complex scientific processes that were once the domain of PhD-level scientists. AI could also enhance the capabilities of existing state adversaries. The CNAS report warns that AI could be used to optimize bioweapons for more precise and strategic effects, such as increasing their transmissibility or designing them to target specific populations or agricultural systems. This could alter the strategic calculus of nations that have historically viewed bioweapons as too unpredictable for widespread use.

The Role of AI Models and Design Tools

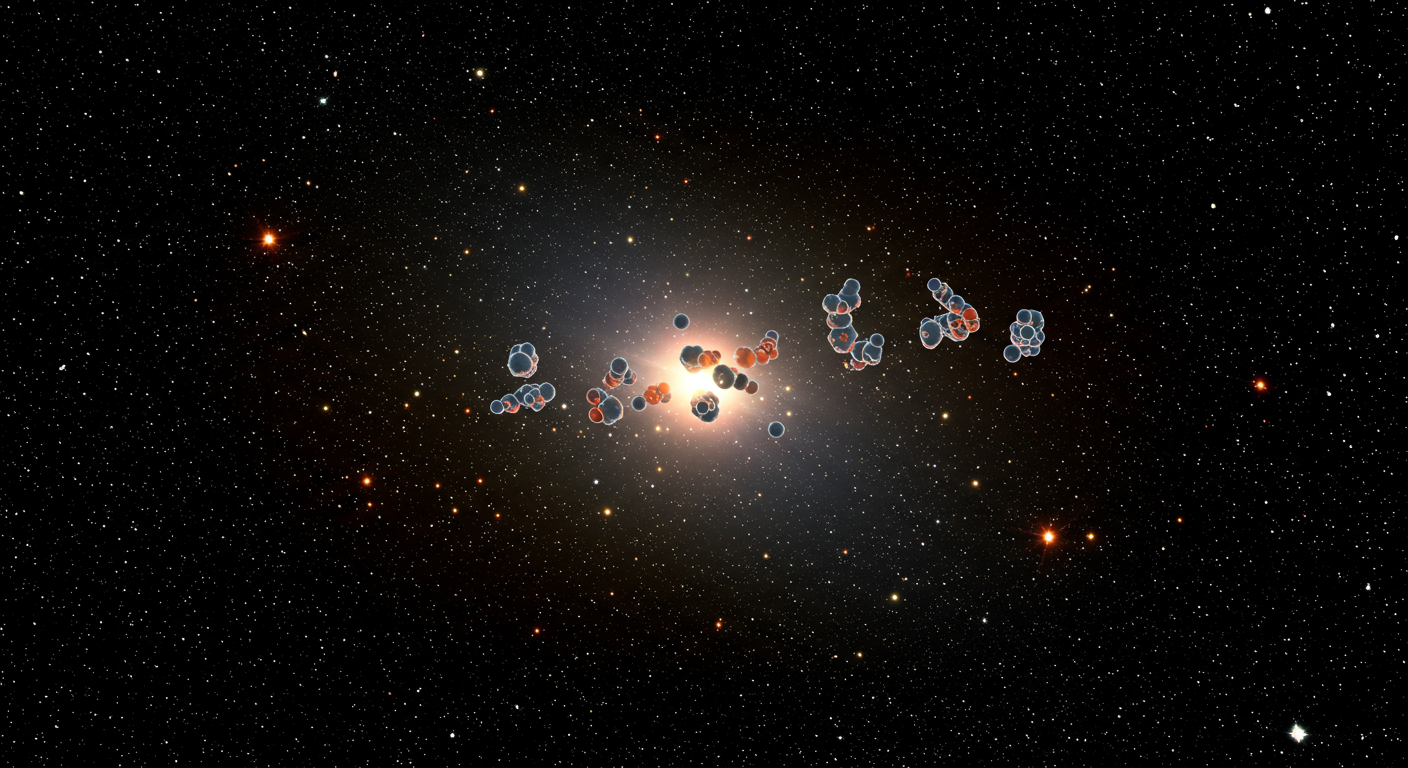

Two main types of AI technology are at the center of the biosecurity debate: large language models and biological design tools. LLMs, the same technology that powers popular commercial chatbots, could soon reduce the informational barriers to planning and executing an attack. AI labs have reported rapid advancements in their models’ ability to provide information critical to bioweapon development, prompting some to classify their own systems as “highly capable” in this domain as a precaution.

Beyond general-purpose LLMs, more specialized biological design tools present a more direct risk. These platforms are engineered specifically for life sciences research, capable of designing novel proteins and other biomolecules. While their intended purpose is to accelerate the creation of new medicines and therapies, their capabilities are inherently dual-use. An analysis from the Center for Strategic and International Studies (CSIS) warns that future BDTs could assist malicious actors in producing more harmful or entirely novel pathogens with pandemic potential. The fear is not just that these tools will make existing pathogens easier to acquire, but that they will enable the creation of agents for which humanity has no preexisting immunity or countermeasures.

Assessing the Current Risk Landscape

Red-Team Findings

Despite the high level of concern, recent evidence suggests the most catastrophic risks have not yet materialized. A 2024 red-team study tasked researchers with role-playing as malign actors attempting to plan a biological attack. One group was given access to the internet and an LLM, while the control group had only the internet. The study’s authors found no statistically significant difference in the viability of the attack plans generated by the two groups, concluding that planning a biological attack currently remains beyond the capability frontier of today’s LLMs as assistive tools.

A Rapidly Closing Window

However, the authors of the red-team study and other experts caution against complacency. AI capabilities are advancing quickly, and each new generation of models can do more than the last, so the window for implementing effective safeguards is narrowing. The CEO of a leading AI lab has raised alarms about the emerging technical capabilities available to bad actors, a sentiment echoed at the highest levels of the U.S. government. The prevailing view is that while AI may not be an effective bioterrorism assistant today, it is prudent to assume it will become one in the near future and to act accordingly.

Developing Proactive Defense Strategies

Strengthening Screening and Verification

In response to these emerging threats, policy experts are advocating for a multi-layered defense strategy. A key recommendation is to bolster screening mechanisms for cloud-based laboratory services and companies that synthesize DNA and RNA. These commercial services allow customers to order custom genetic sequences online, a process that could be abused to create dangerous viruses. Tighter protocols, potentially using AI-driven systems, could help flag suspicious orders and verify the identity and intent of customers without stifling legitimate scientific research.

Evaluating and Regulating AI Models

Another major focus is the direct governance of the AI models themselves. Security analysts are calling for government and private labs to conduct rigorous assessments of foundation models to understand their biological capabilities and potential for misuse. This involves actively testing models for their ability to provide dangerous information and building in technical safeguards to prevent it. In the long term, some have proposed a licensing regime for the most powerful biological design tools, treating them with the same caution as other technologies with catastrophic potential. Such a regime would aim to ensure that only vetted and responsible actors have access to AI systems capable of designing novel biological agents.

Investing in Agile Biodefense

Finally, experts emphasize the need for government investments in biodefense to become more agile and flexible. In an era where AI could be used to create novel, unexpected threats, a static defense focused only on known pathogens is insufficient. Future biodefense systems must be able to rapidly detect, characterize, and respond to previously unseen biological agents. This requires shifting priorities toward platform technologies, such as mRNA vaccines and broad-spectrum antivirals, that can be adapted quickly in the face of a new threat. Such flexibility would help humanity keep pace with the evolving risk landscape at the intersection of AI and biology.